How to Protect Your Data in the Age of AI

Why This Matters

Artificial Intelligence is everywhere—it powers product recommendations, fraud detection, chatbots, and even drug discovery. But every AI system depends on the quality and security of its data. If a model is trained on stolen, poisoned, or poorly-governed data, it can:

- Trigger legal risks (GDPR fines, IP loss)

- Erode customer trust

- Produce incorrect or biased decisions

- Open the door to ransomware and brand damage

Bottom line: AI is only as strong as the security of the data that fuels it.

A 90-Second History of Data Storage

- Ancient era: Hieroglyphs, scrolls, libraries

- 1960s: Mainframes and early integrated data systems (IDS, IMS)

- 1970: Relational databases (pioneered by Edgar F. Codd at IBM)

- 1990s: Client/server sprawl, distributed systems

- 2006+: Public Cloud (AWS, Azure, GCP)

- Today: Hybrid-Cloud, Data Lakes, Lakehouses, Vector DBs

The infrastructure keeps evolving, but one truth remains: data must be stored, retrieved, and protected—no exceptions.

How AI Uses Your Data

- Raw logs, text, and images feed training pipelines

- Cleaned & engineered features live in Feature Stores and Vector DBs

- Models query enterprise data (RAG, embeddings) to serve end-users

- Output is written back to operational systems

Every hop is an extra attack surface.

The Modern Threat Landscape

While traditional risks still exist (breaches, ransomware, insider theft), AI introduces new ones:

- Data Poisoning: Corrupt training sets so the model misbehaves

- Model Extraction: Recreate a proprietary model via API queries

- Prompt/Query Injection: Smuggle malicious instructions through LLM inputs

- Privacy Leakage: Model regurgitates personal or confidential data

Six Golden Strategies for Data Protection

1. Data Classification

Identify and label Personal Identifiable Information (PII), Sensitive Personal Information (SPI), Intellectual Property (IP), and public data.

Protection level flows directly from classification.

2. Access Management

- No direct database logins—always go through an abstraction layer (API, service, role).

- Read-only wherever possible.

- Enforce “least privilege”: minimum rights needed to do the job.

- Multi-Factor Authentication (MFA) + centralized Identity & Access Management (IAM) for humans and machines.

3. Governance for Privileged Users & Apps

- Ban shared or generic admin accounts.

- Store secrets in a Vault (AWS Secrets Manager, HashiCorp Vault, Azure Key Vault). Rotate frequently.

- Continuous monitoring and anomaly detection: unusual time-of-day logins, massive exports, privilege escalation.

4. Encryption Everywhere

- Data-at-Rest: AES-256 (disk, S3 buckets, database files).

- Data-in-Transit: TLS 1.3 for APIs, VPN or private link for service-to-service traffic.

- Separate duties: database admins do NOT hold the encryption keys; a dedicated Key Management Service (KMS) does.

5. Monitoring & Anomaly Detection

- Aggregate logs into SIEM/SOAR.

- Use baselines + machine learning to spot odd behavior (exfiltration, credential misuse, poisoning attempts).

- Tie monitoring depth to data sensitivity and business risk.

6. Continuous Review & Governance

- Quarterly entitlement reviews: does Alice still need access now that she changed teams?

- Update classifications when new data sources appear.

- Pen-tests, red-team drills, table-top exercises, and bug-bounty programs.

- Align with legal frameworks—GDPR, CCPA, HIPAA, Moroccan Law 09-08, ISO 27001.

Putting It All Together – A Mini Case Study

Scenario: A Moroccan e-commerce start-up launches an AI chatbot.

| Data Source | Sensitivity | Protection Blueprint |

|---|---|---|

| Product stock | Low (Public) | Chatbot gets “read_stock” only; cannot touch payment data |

| Customer profiles, payments | High (SPI) | Payment tokens tagged as SPI; encrypted with AES-256, keys in AWS KMS; SIEM alerts for >1MB SPI egress |

- Monitoring: SIEM rule alerts if >1 MB of SPI leaves the VPC or if the chatbot role is used outside office hours

- Secrets: Monthly secret rotation via HashiCorp Vault

Result: Secure user experience, regulatory compliance, reduced blast radius.

The Takeaway

Artificial Intelligence can 10× your innovation, but only if the data behind it is trustworthy and secure. Adopt the six strategies—classify, control access, govern privileged users, encrypt, monitor, and review—and bake them into daily operations, not one-off checklists. Share knowledge visually to align executives, engineers, and customers.

Secure data → Trustworthy AI → Competitive advantage. Start today.

Tags

Related Articles

The Compound Effect in Programming & AI: How Small Improvements Lead to Big Results

Discover how the compound effect—small, consistent improvements—transforms programming, AI, and learning. Learn strategies for exponential growth in tech.

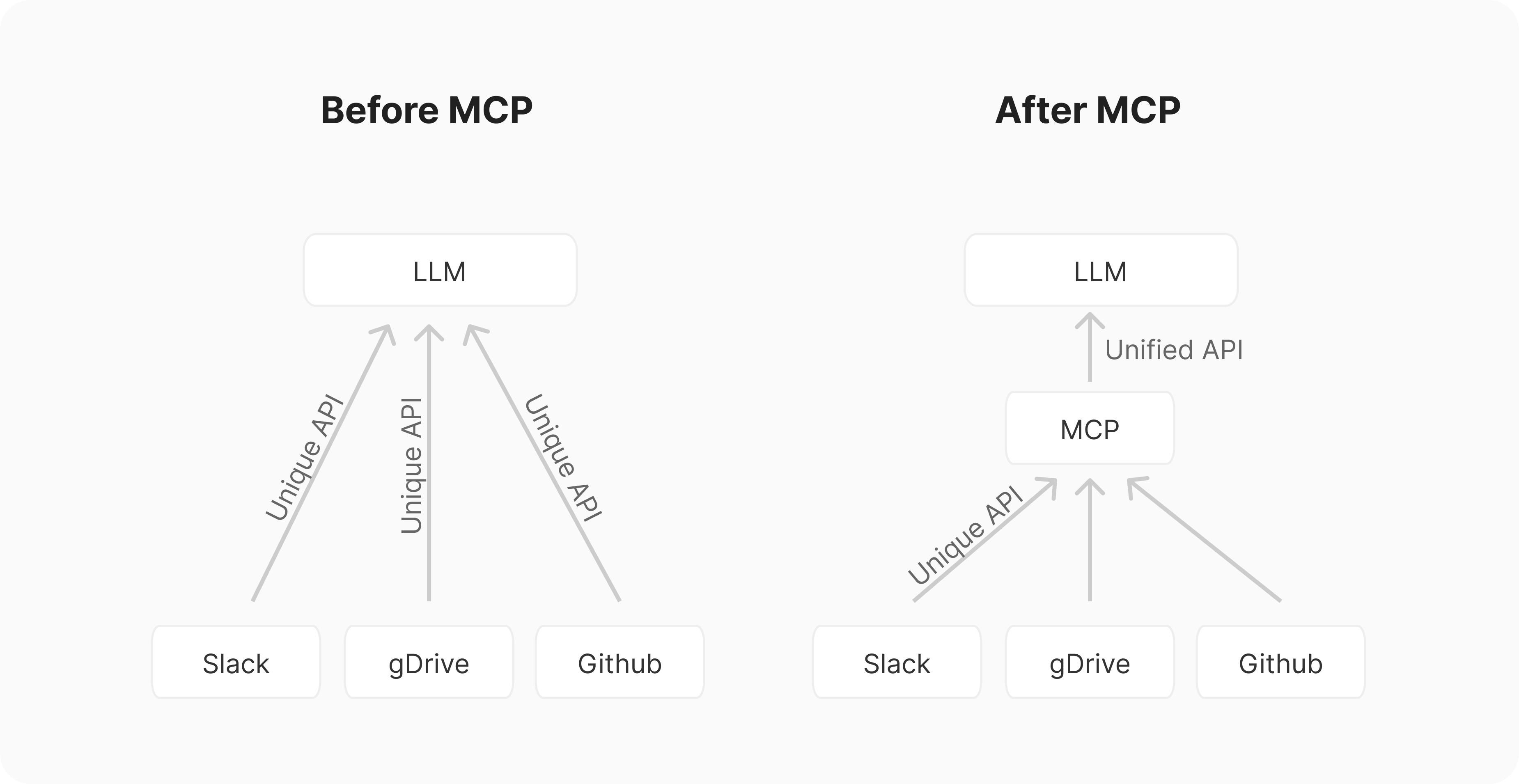

The Model Context Protocol: The New Standard for AI Integration

Explore the Model Context Protocol (MCP), the revolutionary open standard that's transforming how AI systems connect with external data sources and tools. Learn how MCP is solving the M×N integration problem and enabling seamless AI applications.

The Complete Guide to OpenAI API: A Practical Developer's Handbook

Explore a complete guide to the OpenAI API, covering everything from setup and core functionality to advanced features, performance optimization, error handling, and practical use cases. Perfect for developers looking to integrate and leverage OpenAI models effectively.

Python Playground: A Fun, Project-Based Guide to Learning Python for Generative AI

Learn Python through hands-on projects! This beginner-friendly guide walks you through building an AI-powered text adventure game while mastering Python fundamentals in a fun and engaging way.

About the Author

Hafida Belayd

A passionate Data Analyst and technologist exploring the intersections of data and creativity.